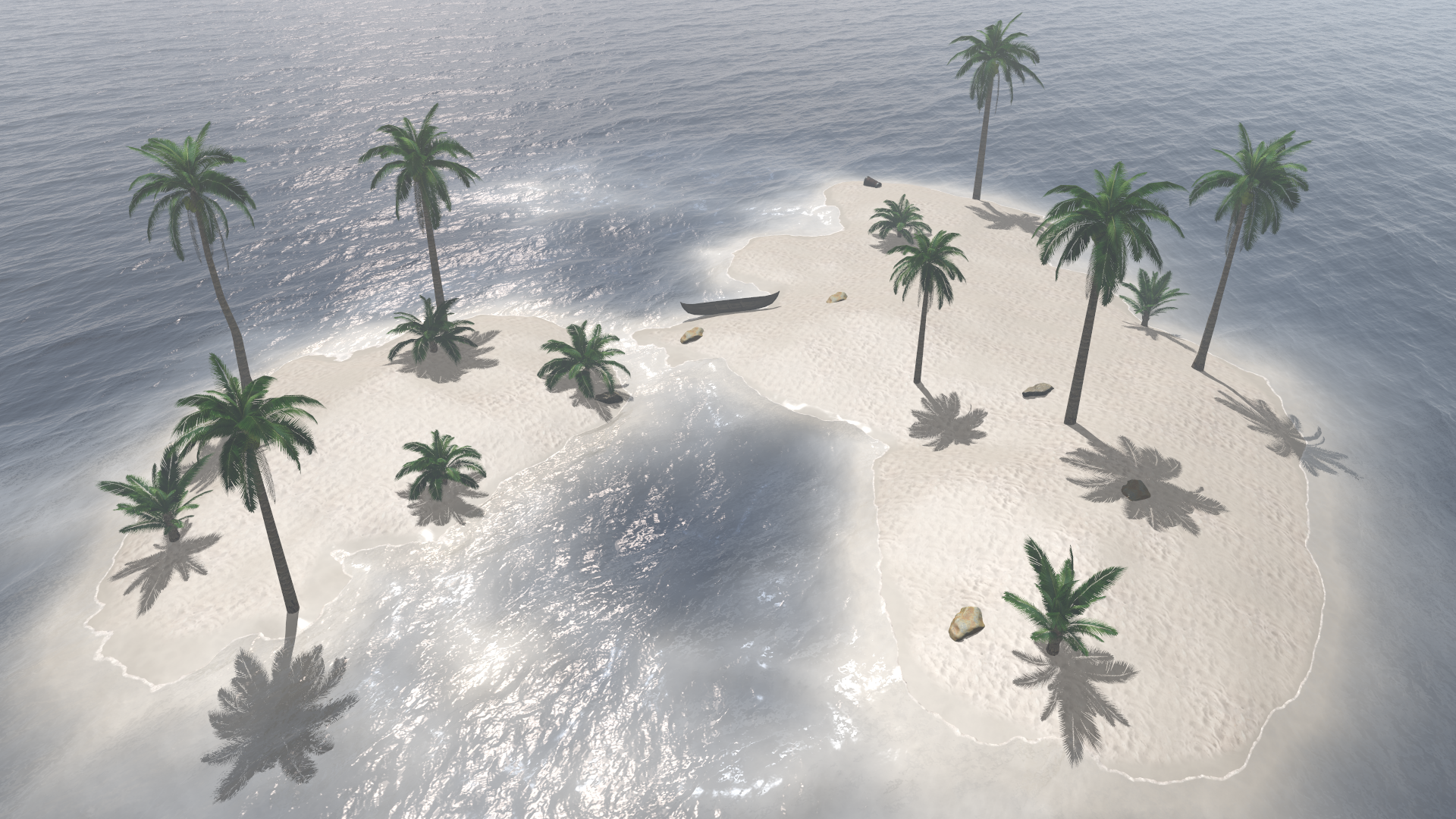

Interactive WebAssembly Version

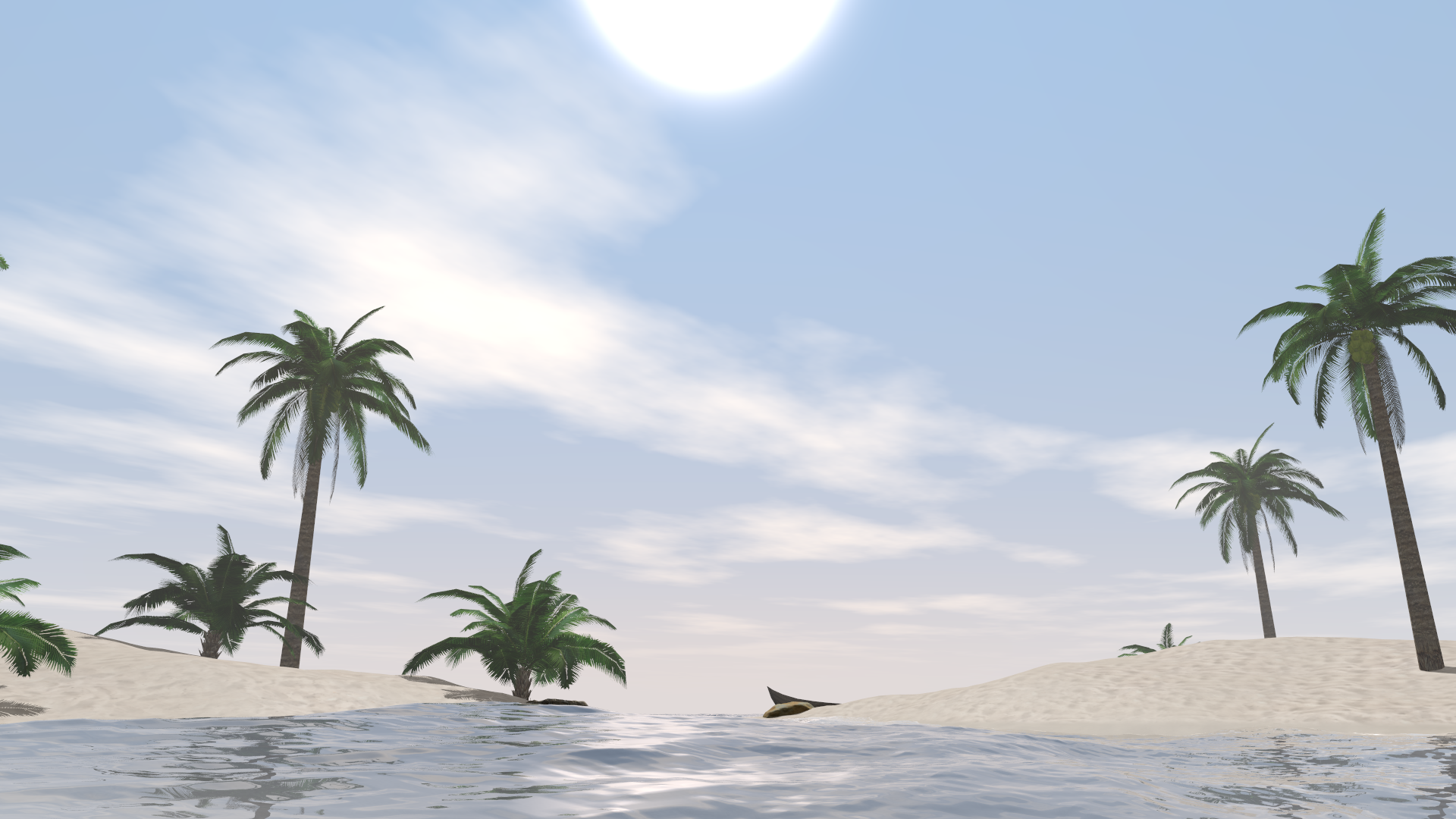

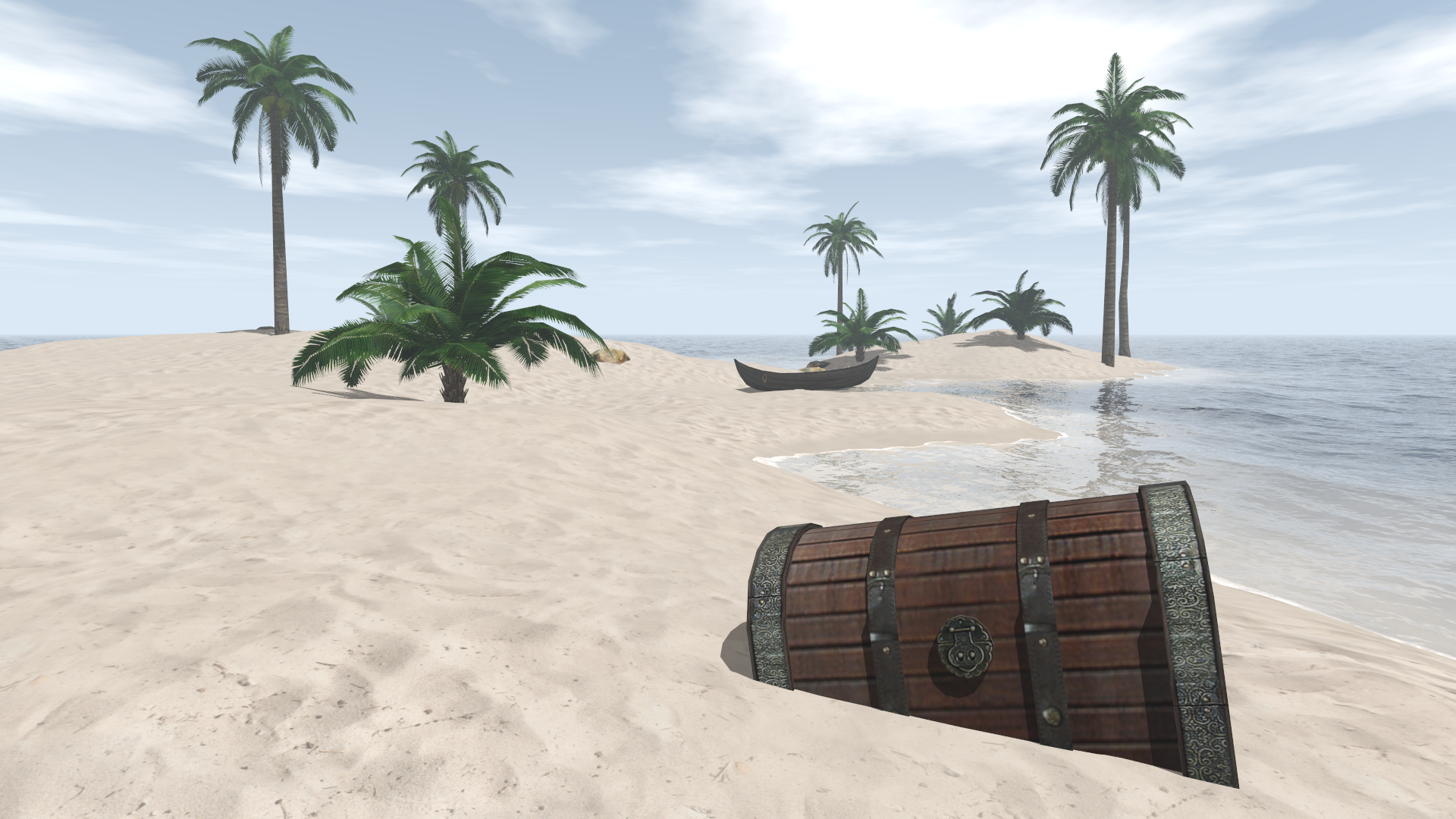

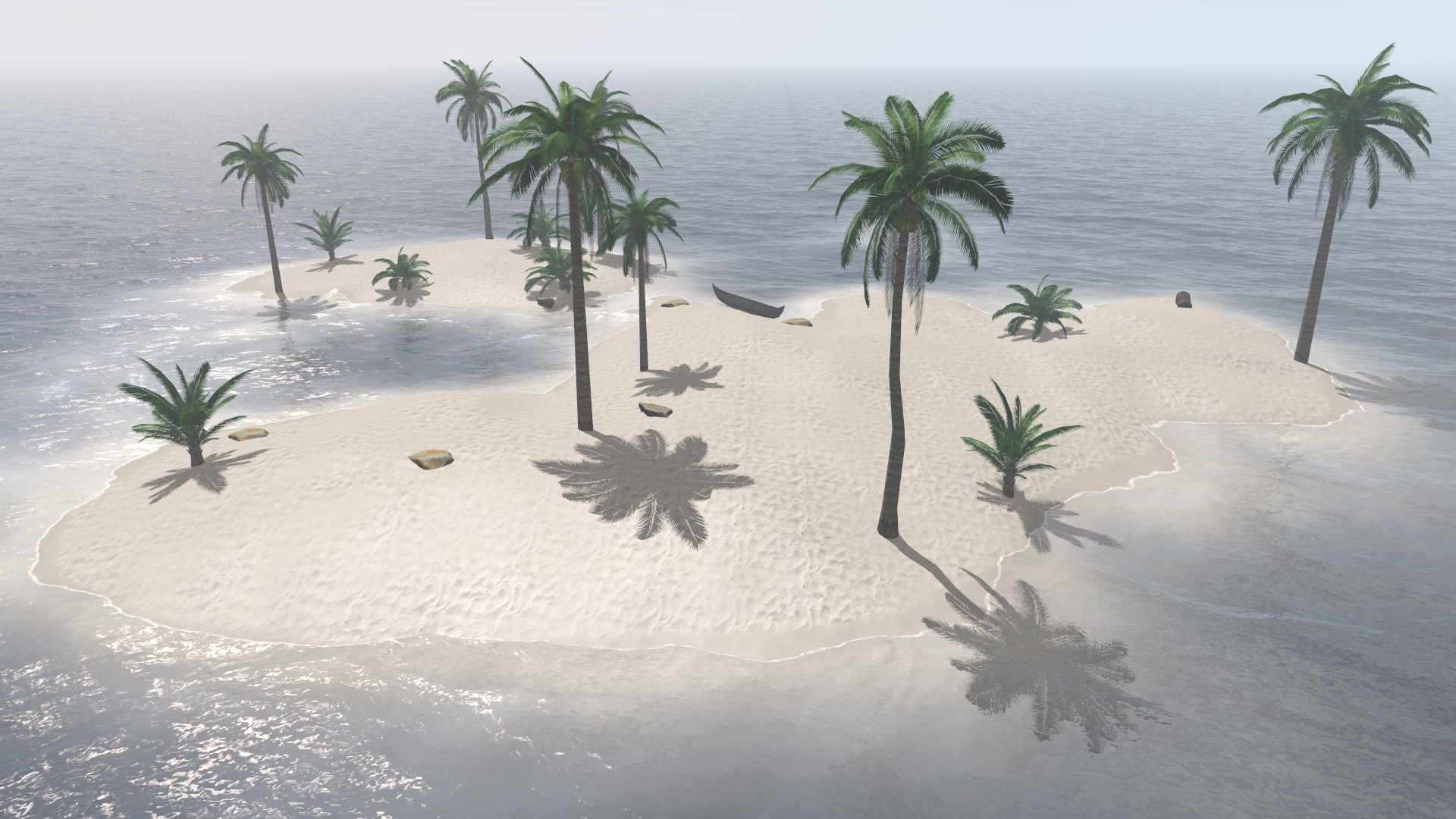

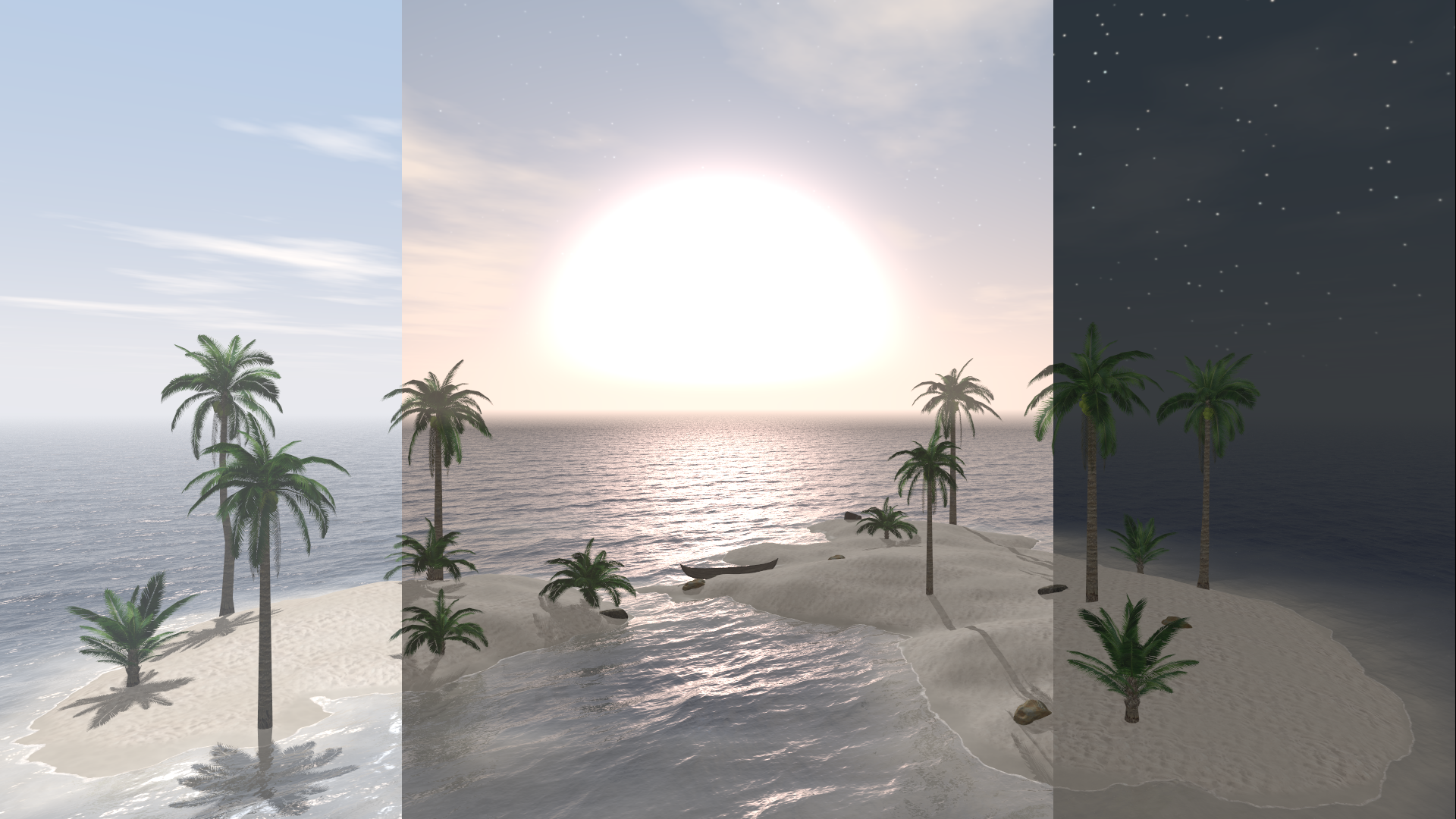

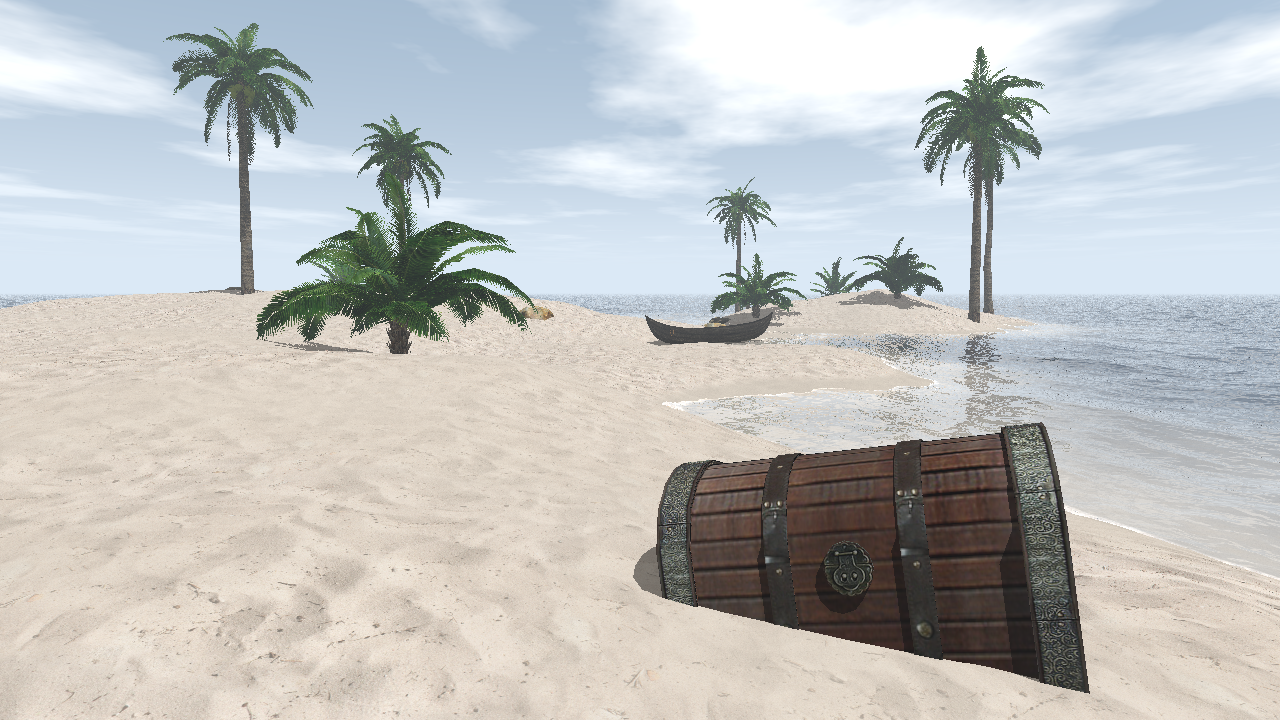

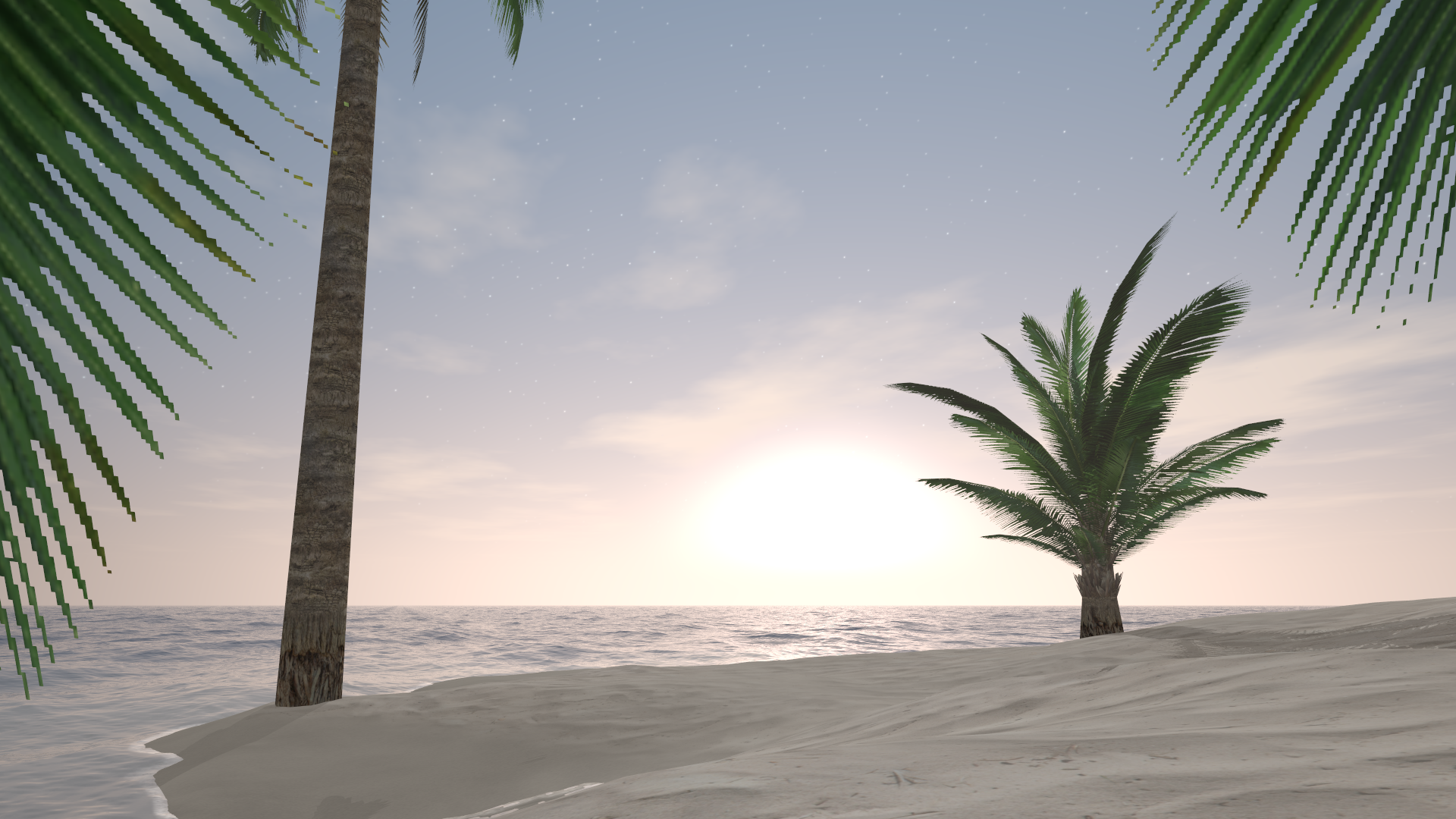

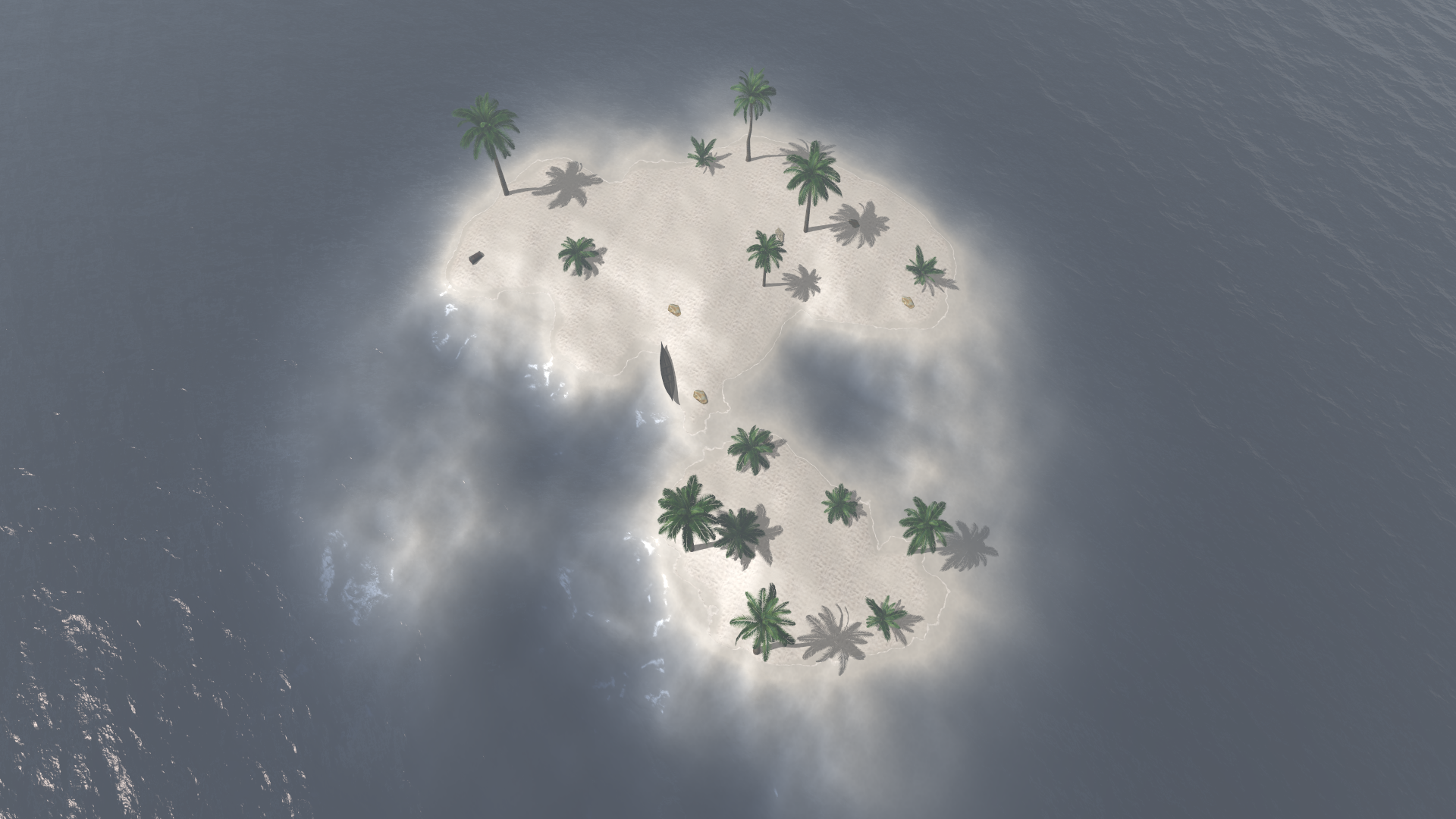

As the saying goes, a picture is worth a thousand words. Taking this idea a step further, I figured that running the ray tracer at interactive frame rates would be worth more than a thousand images. With the help of Emscripten, I ported the engine to WebAssembly. To make use of multiple cores, the wrapper creates multiple web workers that receive jobs from the main program. When the camera stands still, a high-quality (720p) rendering is triggered.
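The job format used by the wrapper is not described here, so the following is only a minimal sketch of one common way to split a frame into jobs for a pool of workers. It assumes rectangular tiles handed out round-robin; `TileJob`, `makeTileJobs`, and `assignJobs` are hypothetical names, not part of the actual engine.

```typescript
// Hypothetical job description: one rectangular tile of the frame.
interface TileJob {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Split a frame into tiles of at most tileSize x tileSize pixels.
// Tiles on the right and bottom edges are clipped to the frame.
function makeTileJobs(frameWidth: number, frameHeight: number, tileSize: number): TileJob[] {
  const jobs: TileJob[] = [];
  for (let y = 0; y < frameHeight; y += tileSize) {
    for (let x = 0; x < frameWidth; x += tileSize) {
      jobs.push({
        x,
        y,
        width: Math.min(tileSize, frameWidth - x),
        height: Math.min(tileSize, frameHeight - y),
      });
    }
  }
  return jobs;
}

// Distribute jobs round-robin: worker w receives jobs w, w + n, w + 2n, ...
function assignJobs(jobs: TileJob[], workerCount: number): TileJob[][] {
  return Array.from({ length: workerCount }, (_unused, w) =>
    jobs.filter((_job, i) => i % workerCount === w),
  );
}
```

In a browser, the main program would send each queued job to its worker with `worker.postMessage(job)`, and `navigator.hardwareConcurrency` is one way to pick the number of workers. A dynamic queue, where idle workers request the next tile, would balance load better when some tiles are more expensive than others.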

Warning: Running this may crash your browser if your machine is not powerful enough.

| Action | Move | Height | Time | Randomize |
| --- | --- | --- | --- | --- |
| Keys | WASD | EQ | RF | G |

Note that this version consumes far more memory than the native one, since each web worker has to load the scene and textures independently. I could have used SharedArrayBuffer to reduce memory usage (and probably improve performance a little), but it is currently disabled by default in most browsers for security reasons.
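To make the memory cost concrete, here is a rough back-of-the-envelope sketch. It assumes the scene and textures dominate memory use and that every worker holds one full copy; the function name and the numbers in the usage example are purely illustrative, not measurements of this engine.

```typescript
// Rough estimate of the memory needed for scene data across all workers.
// With per-worker copies the cost grows linearly with the worker count;
// with a single shared buffer it stays constant.
function estimateSceneMemory(sceneBytes: number, workerCount: number, shared: boolean): number {
  return shared ? sceneBytes : sceneBytes * workerCount;
}

// Illustrative numbers only: a 200 MiB scene and 8 workers.
const MiB = 1024 * 1024;
const perWorkerCopies = estimateSceneMemory(200 * MiB, 8, false); // 1600 MiB in total
const sharedBuffer = estimateSceneMemory(200 * MiB, 8, true); // 200 MiB in total
```

Under these assumptions the footprint scales with the number of workers, which is why a shared buffer would help most on machines with many cores.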